TL;DR

We introduce the Intent Router, a small language model (SLM) that captures the intent of a query and classifies it into a specific route. This lightweight model is significantly more cost-effective and consistently faster than generic LLMs such as GPT-4o, while maintaining high accuracy. You can try it for free. For customized solutions, including fine-tuning or on-premises deployment, please contact us.

The Intent Classification Task

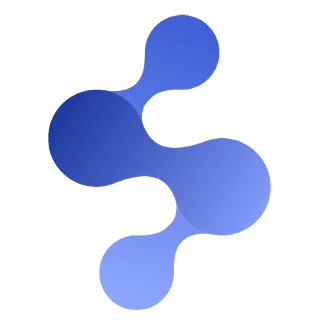

Intent classification is the task of categorizing a query by its underlying intent. Each query is assigned to one of several options, which we call “routes.” For example, the query “How do neural networks work in machine learning?” could be classified into the “Computer Science” route.

We developed the Intent Router for this task. It takes a natural language query together with a set of routes and selects the route that best matches the query’s intent. A route consists of a name and a description, and can optionally include example utterances for in-context few-shot learning. Providing utterances can improve accuracy and helps the model reflect the developer’s intent. For instance, the “Computer Science” route could include the utterance “How does deep learning work?” to make it more likely that similar queries are routed correctly.
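As a rough illustration of what a route contains, here is a minimal Python sketch that represents routes and renders them, together with a query, into a single few-shot prompt. The `Route` class, the `build_prompt` helper, and the prompt wording are hypothetical; they are not the Intent Router's actual input format or API.

```python
from dataclasses import dataclass, field


@dataclass
class Route:
    """A route: a name, a description, and optional example utterances.

    Field names are illustrative assumptions, not the Intent Router's schema.
    """
    name: str
    description: str
    utterances: list[str] = field(default_factory=list)


def build_prompt(query: str, routes: list[Route]) -> str:
    """Render routes and a query into one classification prompt.

    Example utterances, when present, serve as in-context few-shot examples.
    """
    lines = ["Choose the route that best matches the intent of the query.", ""]
    for route in routes:
        lines.append(f"Route: {route.name}")
        lines.append(f"Description: {route.description}")
        for utterance in route.utterances:
            lines.append(f"Example: {utterance}")
        lines.append("")
    lines.append(f"Query: {query}")
    lines.append("Route:")
    return "\n".join(lines)


routes = [
    Route("Computer Science", "Questions about computing and software.",
          ["How does deep learning work?"]),
    Route("Biology", "Questions about living organisms."),
]
print(build_prompt("How do neural networks work in machine learning?", routes))
```

Keeping `utterances` optional mirrors the description above: a route with only a name and description is still valid, and utterances add in-context examples when provided.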

Where to Use the Intent Router?

Here are three scenarios where the Intent Router can be useful:

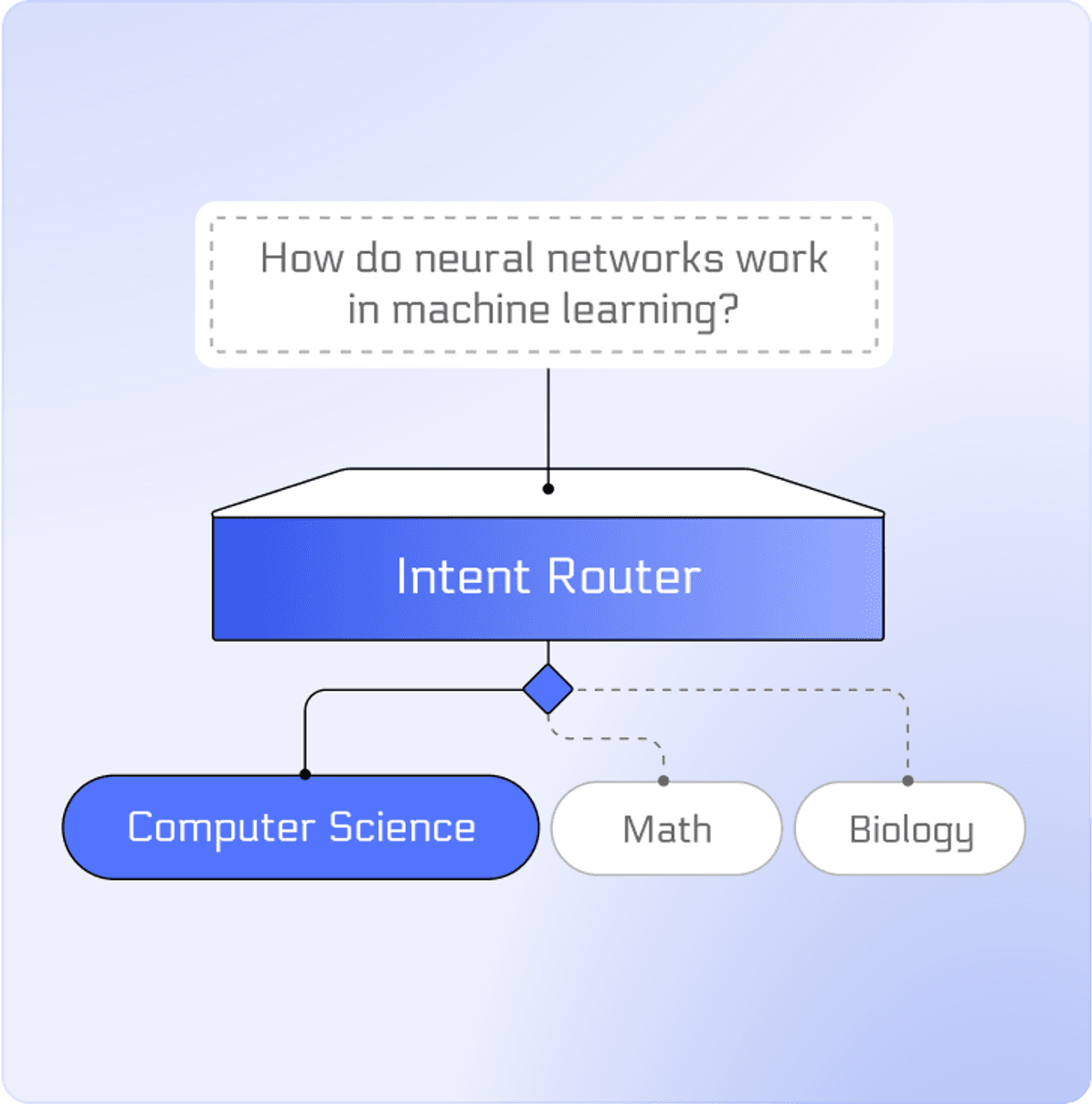

Chatbot Services: The Intent Router can guide a chatbot to the appropriate response based on user input. For example, consider a chatbot for an e-commerce service that can either 1) explain how to use the service or 2) recommend products. If these are implemented as separate routes, the query “I want a refund for clothes I bought” can be routed to the “Instructions” route. The chatbot then delivers the correct response using predefined prompts, database access, and other logic.
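A chatbot built this way typically maps each route name to its own handler. The sketch below assumes two hypothetical routes, `Instructions` and `Recommendation`. The `classify` function is only a stand-in that scores word overlap with each route's description so the example runs; the real Intent Router classifies by intent, not by shared words.

```python
from typing import Callable

# Hypothetical routes for an e-commerce chatbot: name -> description.
ROUTES: dict[str, str] = {
    "Instructions": "refund return shipping account help using the service",
    "Recommendation": "recommend suggest products to buy",
}

# One handler per route; real handlers would use predefined prompts,
# database access, and other service logic.
HANDLERS: dict[str, Callable[[str], str]] = {
    "Instructions": lambda q: f"[instructions] {q}",
    "Recommendation": lambda q: f"[recommendation] {q}",
}


def classify(query: str, routes: dict[str, str]) -> str:
    """Stand-in for the Intent Router: pick the route whose description shares
    the most words with the query (ties go to the first route listed)."""
    words = set(query.lower().split())
    return max(routes, key=lambda name: len(words & set(routes[name].lower().split())))


def respond(query: str) -> str:
    """Route the query, then hand it to that route's handler."""
    return HANDLERS[classify(query, ROUTES)](query)


print(respond("I want a refund for clothes I bought"))
```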

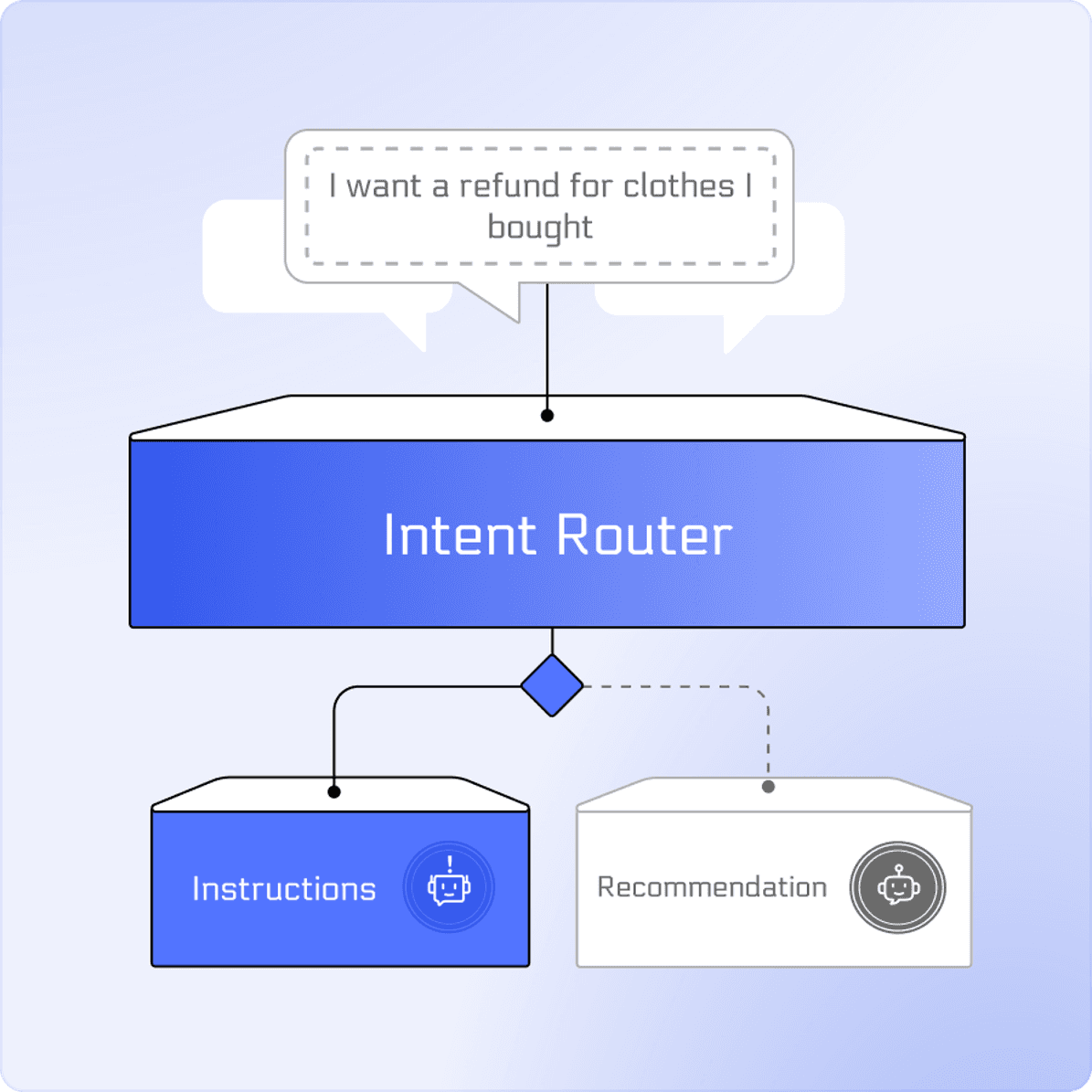

Retrieval-Augmented Generation (RAG) Services: The Intent Router can direct queries to the correct database for reference. Imagine a scenario where a user wants information about a product. If product data is stored in different databases based on category, the query “I want to know the specifications of this laptop” can be routed to the “Electronics” route, ensuring more accurate retrieval than searching through all databases.
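The retrieval benefit comes from searching only the collection that matches the route. In this sketch, the per-category `DATABASES`, the keyword-based `route_query` stand-in for the Intent Router, and the word-overlap ranking are all hypothetical simplifications. A real RAG pipeline would more likely keep a vector or full-text index per category.

```python
# Hypothetical product "databases", one per category (route).
DATABASES: dict[str, list[str]] = {
    "Electronics": [
        "Laptop X1: 16 GB RAM, 512 GB SSD, 14-inch display",
        "Phone P2: 128 GB storage, 6.1-inch display",
    ],
    "Clothing": [
        "Rain jacket: waterproof, sizes S to XL",
    ],
}


def route_query(query: str) -> str:
    """Stand-in for the Intent Router (a keyword rule, for illustration only)."""
    electronics_terms = {"laptop", "phone", "specifications", "battery"}
    return "Electronics" if electronics_terms & set(query.lower().split()) else "Clothing"


def retrieve(query: str, k: int = 2) -> list[str]:
    """Search only the database chosen by the router, ranked by word overlap."""
    docs = DATABASES[route_query(query)]
    words = set(query.lower().split())
    ranked = sorted(docs, key=lambda d: len(words & set(d.lower().split())), reverse=True)
    return ranked[:k]


print(retrieve("I want to know the specifications of this laptop", k=1))
```

Because the clothing collection is never searched for this query, it cannot contribute irrelevant matches, which is the accuracy argument made above.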

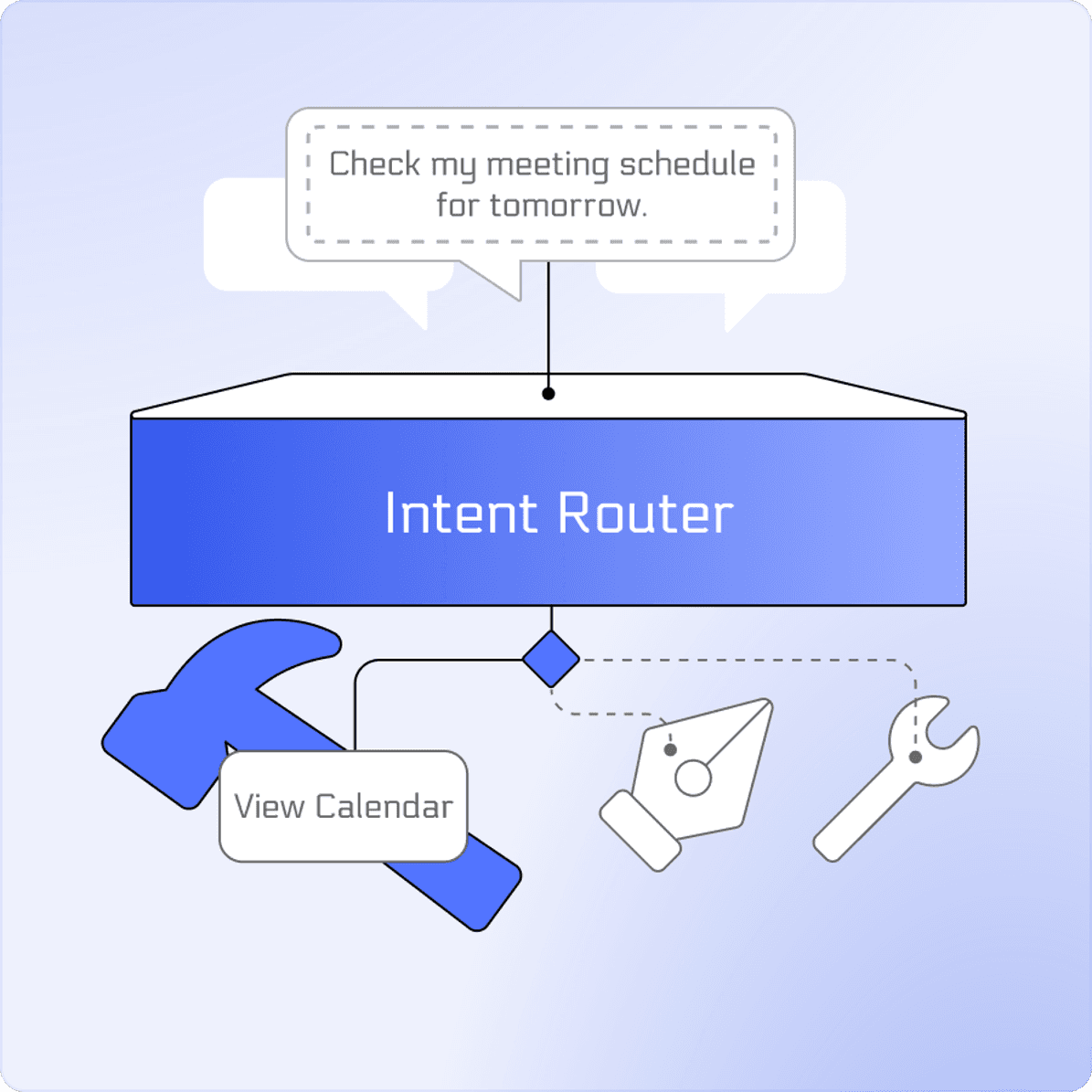

Agent Services: The Intent Router can help an agent take the appropriate action based on a query. For example, the query “Check my meeting schedule for tomorrow” might trigger the “view calendar” action among several possible actions.
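For agents, each route can correspond to an executable action. The following sketch uses a hypothetical registry decorator with two placeholder actions, `view calendar` and `send email`. The `pick_route` function stands in for a call to the Intent Router, which would receive the registered route names as its set of routes.

```python
from datetime import date, timedelta
from typing import Callable

# Registry mapping route names to the actions an agent can execute.
ACTIONS: dict[str, Callable[[], str]] = {}


def action(route_name: str):
    """Register a function as the action for a route (hypothetical helper)."""
    def register(fn: Callable[[], str]) -> Callable[[], str]:
        ACTIONS[route_name] = fn
        return fn
    return register


@action("view calendar")
def view_calendar() -> str:
    tomorrow = date.today() + timedelta(days=1)
    return f"Showing meetings for {tomorrow.isoformat()}"


@action("send email")
def send_email() -> str:
    return "Opening email composer"


def pick_route(query: str) -> str:
    """Stand-in for the Intent Router; a real agent would call the model here."""
    q = query.lower()
    return "view calendar" if "schedule" in q or "meeting" in q else "send email"


def run_agent(query: str) -> str:
    """Choose an action by route, then execute it."""
    return ACTIONS[pick_route(query)]()


print(run_agent("Check my meeting schedule for tomorrow"))
```

Registering actions by route name keeps the list of routes sent to the router in sync with the actions the agent can actually perform.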

Why a Lightweight Model is Essential

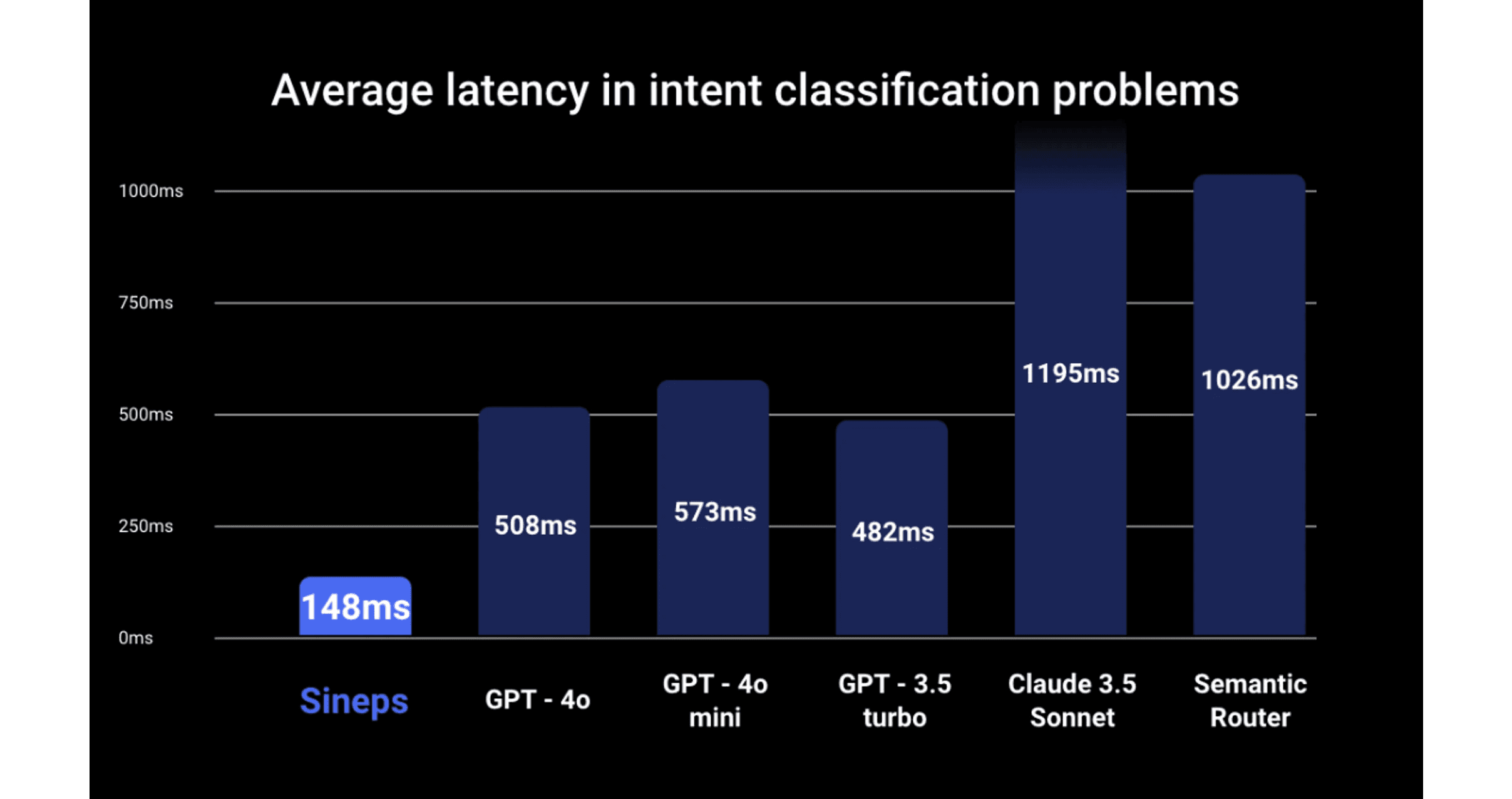

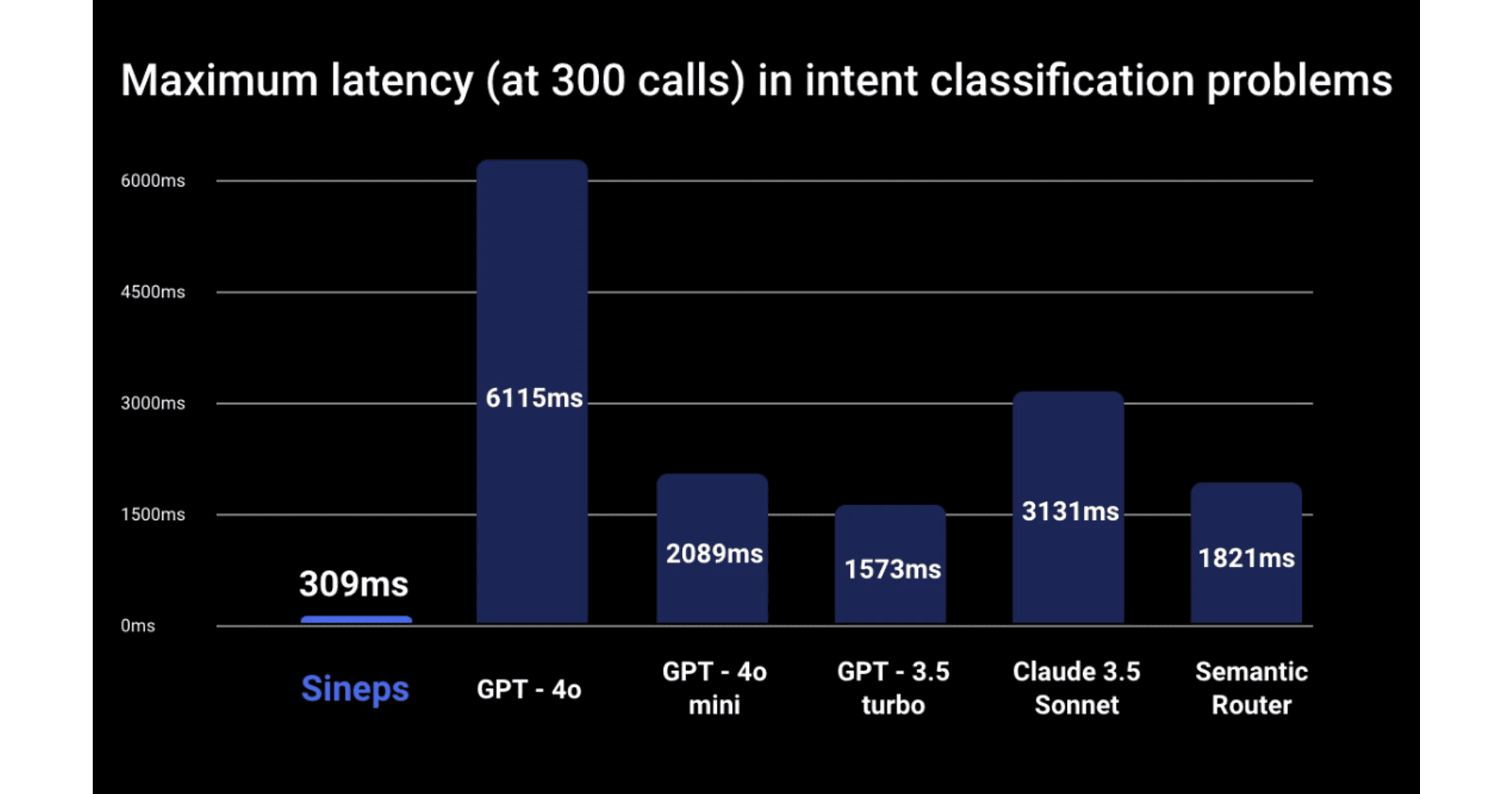

Speed is crucial in real-world services, especially in real-time applications where latency should stay under 200 ms. Consistent latency also matters for stable services, and we found that generic LLMs can suffer from high tail latency, which makes them less suitable.

Across 300 iterations, the Intent Router was on average 3.4 times faster than GPT-4o, and up to 19.8 times faster. Compared with the recent lightweight model GPT-4o mini, it was 3.8 times faster on average and up to 6.8 times faster.
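The post does not publish its benchmarking code, and "on average" could mean either the ratio of mean latencies or the mean of per-iteration ratios. The sketch below assumes paired measurements (one router call and one baseline call per iteration). It uses the ratio of means for the average speedup, the largest per-iteration ratio for the maximum, and a nearest-rank p99 to expose tail latency. The latency values in the usage example are made up for illustration and are not the post's results.

```python
import math
import statistics


def percentile(values: list[float], q: float) -> float:
    """Nearest-rank percentile for q in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


def compare(router_ms: list[float], baseline_ms: list[float]) -> dict[str, float]:
    """Summarize paired latency samples (index i = the same iteration)."""
    if len(router_ms) != len(baseline_ms) or not router_ms:
        raise ValueError("need equally sized, non-empty samples")
    ratios = [b / r for r, b in zip(router_ms, baseline_ms)]
    return {
        "mean_speedup": statistics.mean(baseline_ms) / statistics.mean(router_ms),
        "max_speedup": max(ratios),
        "router_p99_ms": percentile(router_ms, 99),
        "baseline_p99_ms": percentile(baseline_ms, 99),
    }


# Illustrative numbers only: a baseline with one slow outlier (tail latency).
router = [100.0, 110.0, 90.0, 100.0]
baseline = [300.0, 340.0, 1800.0, 260.0]
print(compare(router, baseline))
```

With real data, comparing the two p99 values shows whether a model's latency is consistent, which an average alone can hide.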

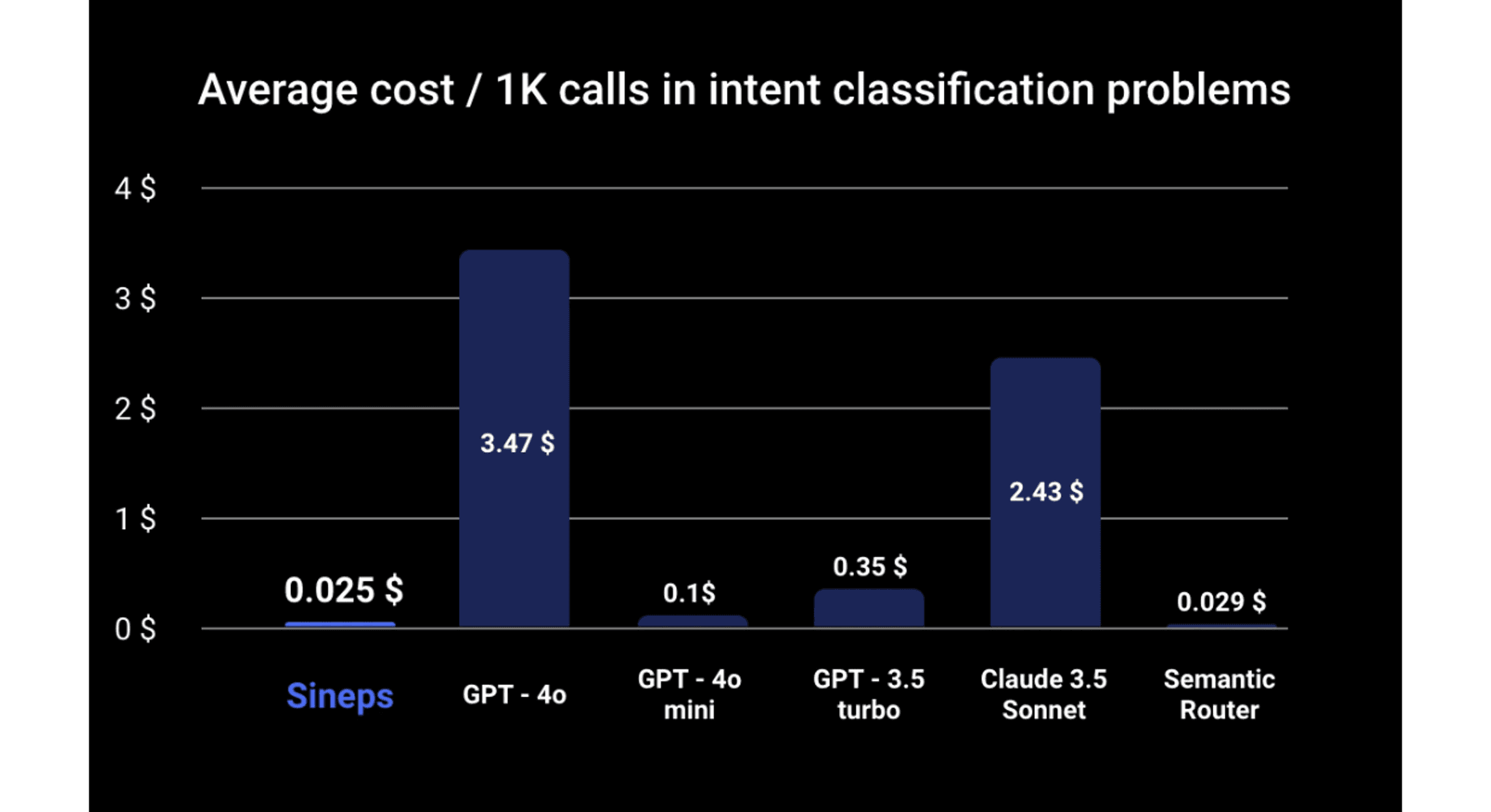

Since the model is frequently invoked, cost efficiency is also a key consideration. The Intent Router is 138 times cheaper than GPT-4o and 4 times cheaper than GPT-4o mini.
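To see what the stated cost ratios mean for a budget, here is a small worked calculation. It assumes the ratios apply uniformly per request. The per-request price and traffic volume in the usage example are hypothetical placeholders, not actual pricing.

```python
# Relative cost ratios as stated in the post ("N times cheaper than").
CHEAPER_THAN: dict[str, float] = {"GPT-4o": 138.0, "GPT-4o mini": 4.0}


def monthly_cost(cost_per_request: float, requests_per_day: int, days: int = 30) -> float:
    """Total spend for a month of traffic at a fixed per-request cost."""
    return cost_per_request * requests_per_day * days


def router_cost(baseline_model: str, baseline_cost: float) -> float:
    """Cost of serving the same traffic with the Intent Router."""
    return baseline_cost / CHEAPER_THAN[baseline_model]


# Hypothetical: $0.002 per GPT-4o request at 100,000 requests per day.
gpt4o_monthly = monthly_cost(0.002, 100_000)
print(f"GPT-4o: ${gpt4o_monthly:,.2f}  Intent Router: ${router_cost('GPT-4o', gpt4o_monthly):,.2f}")
```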

Accuracy and Capability Scope

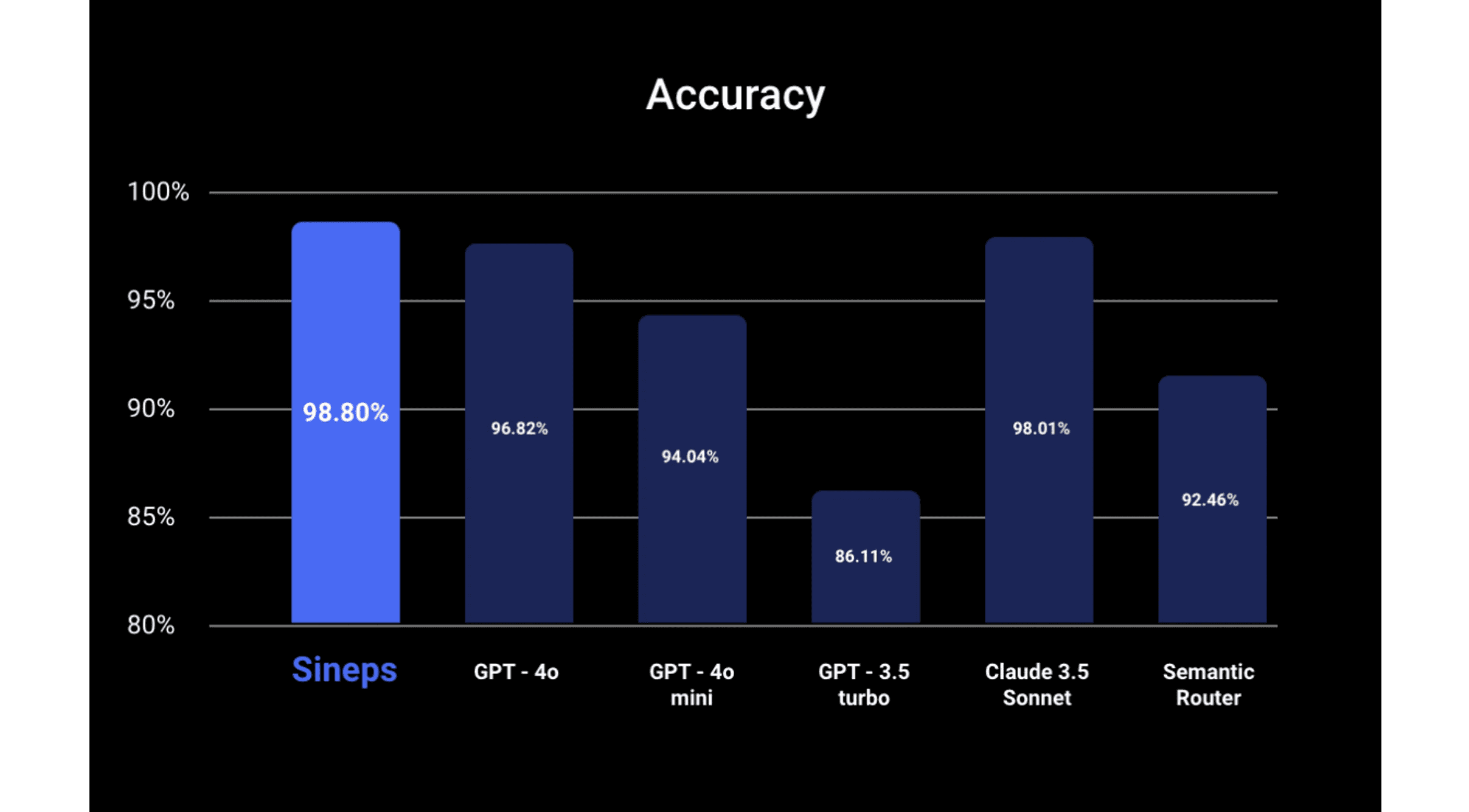

To serve as a viable alternative to generic LLMs in AI products, the Intent Router must deliver comparable accuracy. In our tests on custom datasets, which include publicly available intent classification examples from the web, it achieves accuracy similar to GPT-4o and Claude 3.5 Sonnet. Our error analysis found that most of the remaining errors involve ambiguous queries or stem from noise in the dataset.
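The post does not describe its evaluation harness. Below is one simple way to measure routing accuracy while keeping misrouted queries for the kind of manual error analysis mentioned above. The labeled examples and the `toy_router` classifier are hypothetical.

```python
from collections import Counter
from typing import Callable


def evaluate(
    examples: list[tuple[str, str]], classify: Callable[[str], str]
) -> tuple[float, list[tuple[str, str, str]]]:
    """Return accuracy and (query, expected, predicted) for every miss."""
    errors = []
    for query, expected in examples:
        predicted = classify(query)
        if predicted != expected:
            errors.append((query, expected, predicted))
    return 1 - len(errors) / len(examples), errors


# Hypothetical labeled data and a toy classifier standing in for a real router.
examples = [
    ("How do neural networks work?", "Computer Science"),
    ("What is a compiler?", "Computer Science"),
    ("How do cells divide?", "Biology"),
    ("Can computers model protein folding?", "Biology"),
]


def toy_router(query: str) -> str:
    tech = ("neural", "compiler", "computer")
    return "Computer Science" if any(t in query.lower() for t in tech) else "Biology"


accuracy, errors = evaluate(examples, toy_router)
print(f"accuracy = {accuracy:.2f}")
# Count (expected, predicted) pairs to see which routes get confused.
print(Counter((expected, predicted) for _, expected, predicted in errors))
```

Reading through `errors` by hand is how one would check whether misses come from ambiguous queries or from mislabeled data. The protein-folding query, for instance, plausibly fits either route.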

The Intent Router is trained to support multiple languages: English, Chinese, French, Korean, Spanish, Italian, and Arabic.

The Intent Router is designed for scenarios where individuals with general knowledge can consistently reach the same conclusion. For subjective decisions or planning tasks, such as “How do I feel better?”, accuracy is not guaranteed. More details can be found here.

For specialized domains or cases involving private data, fine-tuning may be required. In such cases, feel free to contact us.

Training

We trained the Intent Router using a synthetic dataset that was created to ensure the model performs well across a broad range of domains. This dataset includes a variety of characteristics to enhance the model’s generalization capabilities.

To achieve high performance in multiple languages with low inference time, the Intent Router is fine-tuned from Gemma2-2B.

API Service

To make the Intent Router easy to use, we offer it as an API. The service is powered by optimized hardware and an inference engine designed for efficient performance. Below is an example of using our API.

Try it for free!

During our Open Beta period, you can use the Intent Router for free. Even after the official launch, a trial version will continue to be available at no cost. Visit here to try the Intent Router.

Who we are

We develop solutions for cheaper, faster, and more reliable AI applications. Many AI products today rely on LLMs, and demand for LLMs is only expected to grow. Generic LLMs like GPT-4o are powerful, but using them for every task is inefficient in terms of time and cost. We offer a range of tools designed to address these inefficiencies, including the Intent Router introduced here. Please visit our website to explore our services.